Timed tests are a major source of stress for students and often cause them to underperform. While the pressure of a time limit can improve some students’ focus and productivity, severe time limits prevent many others from fully demonstrating their knowledge. In education, speed has long been equated with intelligence and seen as a sign of subject mastery, so teachers have designed their tests around this idea. But are the fastest students necessarily the smartest students, and are timed tests the most effective means to measure student knowledge and intelligence? Every student is unique, and there are different types of test takers, just as there are different types of learners. None of the studies reviewed here found that a faster test-taking pace corresponds to higher accuracy. Instead, factors like disability, gender, age, and language proficiency all affect students’ test-taking pace but not necessarily their knowledge of the material. Time limits affect some students’ performance disproportionately and are therefore an ineffective means of assessing academic knowledge and intelligence. Schools should take steps to allot more time during tests for all students to better assess their true knowledge and grow their potential.

Inefficacy of Time Limits

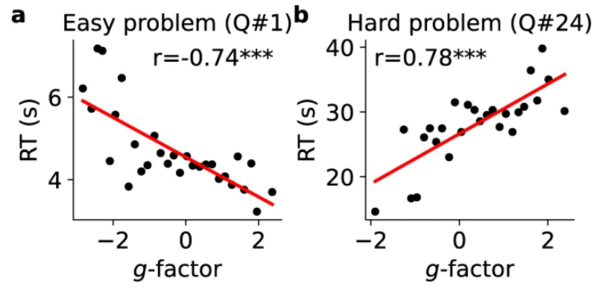

It is a common misconception in education that students with mastery of a topic will solve problems faster. However, placing severe time constraints on tests does not effectively assess a student’s fluency in a subject. Students with higher intelligence scores solve easy problems faster but are consistently slower when solving difficult problems (Schirner et al., 2023). This finding comes from a study on the relationship between brain network structure and intelligence, which determined intelligence scores through a series of tests measuring factors such as fluid intelligence (the ability to solve new problems independent of previous experience) and reaction time (the individual’s processing speed) and combined them into an overall, or general, intelligence score. In an untimed test where questions were ordered by increasing difficulty, participants with higher general and fluid intelligence were found to have higher processing speeds, but this didn’t correspond to a faster testing pace. In fact, “a meta-analysis over 172 studies and 53,542 participants reported strong negative correlations between general intelligence and diverse measures of RT [reaction time],” but for more difficult questions, the data showed a positive correlation (see Appendix A) (Schirner et al., 2023). These high-scoring individuals were quicker to answer the first set of simple questions but slower to answer questions as the difficulty increased. The study found that slower response times correlated with higher accuracy on test questions, suggesting that students perform better when they take more time to answer (Schirner et al., 2023). For tests that aim to assess students’ intelligence by their ability to solve difficult problems rather than their speed at solving simple ones, time limits should therefore be ample or abolished completely.

Timed exams reward quick thinking, a skill that does not necessarily belong to the students with the most knowledge or highest intelligence. Instead of assessing student knowledge, timed tests evaluate how well a student can reason under stress and guess answers quickly, making them an ineffective measure of actual student performance (Gladwell, 2019). To use Gladwell’s metaphor, timed tests reward the hare over the tortoise, or the quick thinker over the slow, methodical one. A timed test aimed at forcing students to rush instead “favors those capable of processing without understanding,” i.e., hares, not tortoises (Gladwell, 2019). This test format evaluates how quickly students can solve difficult problems, not how well they can solve them. Yet in most careers, success is determined not by whether a person is a tortoise or a hare but by how hard and passionately they work. Important standardized tests like the ACT and LSAT therefore shouldn’t single out one group as the better test-takers; the world needs both kinds of thinkers, and tests should assess both.

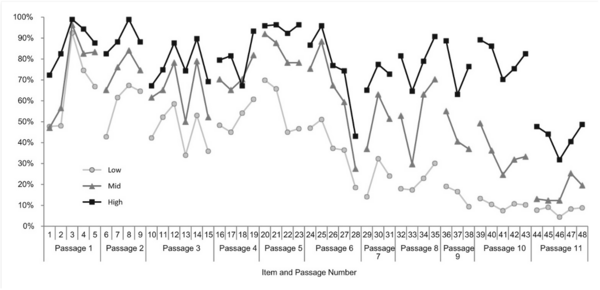

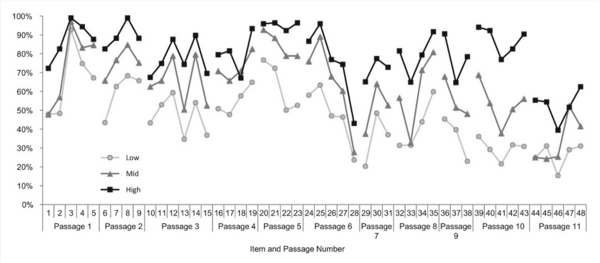

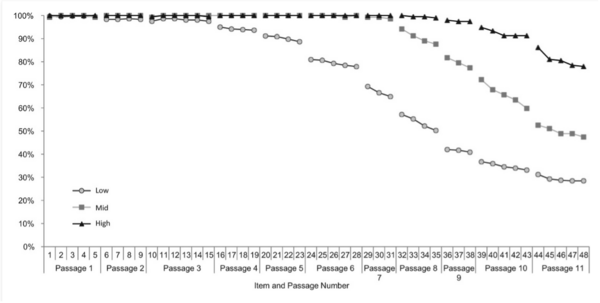

By not assessing both methods of thinking, severe time limits (those that cause most students to not finish a test) arbitrarily and unfairly increase the performance gap between high- and low-scoring test-takers, particularly between fast and slow readers. A study from the Journal of Psychoeducational Assessment examined a multiple-choice reading comprehension test with a fixed time limit and analyzed the impact that non-attempted questions had on student performance. The data showed a steep drop-off in the percentage of correct responses toward the end of the test, most notably for the bottom third of scorers during the final third of the test (see Appendix B). This decrease in accuracy was steeper and began earlier in the test for lower-scoring test-takers, a trend caused by two factors: increases in both question difficulty and the percentage of unanswered questions as the test progressed (Clemens et al., 2014). When the correct response percentage was calculated for only the attempted questions (see Figure B2), the performance decline throughout the test was less pronounced, particularly for the lower third of scorers. This shows that non-attempted questions had a more significant effect on the performance of lower-scoring test-takers than the increasing difficulty of the problems did. The high rates of non-attempted questions can be attributed primarily to the time limits placed on test-takers, but also to other problems caused by long, continuous reading assessments, during which many students lose focus or give up entirely (Clemens et al., 2014). When rates of incompletion are this high, a test does not accurately assess students’ reading comprehension or their ability to answer accurately but rather how quickly they can read and how many questions they can answer in a short time. Such tests show only how well students can take tests, not their knowledge of the material or their intelligence.
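The difference between the two ways of scoring described above (all questions versus attempted questions only) can be made concrete with a small calculation. The following is only a minimal sketch: the function name `percent_correct` and the sample responses are hypothetical and are not taken from Clemens et al. (2014). It assumes each response is recorded as 1 (correct), 0 (incorrect), or `None` (not attempted).

```python
from typing import Optional, Sequence


def percent_correct(
    responses: Sequence[Optional[int]], attempted_only: bool = False
) -> float:
    """Return the percentage of correct responses.

    Each response is 1 (correct), 0 (incorrect), or None (not attempted).
    With attempted_only=False, unanswered questions count as incorrect.
    With attempted_only=True, unanswered questions are left out entirely.
    """
    if attempted_only:
        scored = [r for r in responses if r is not None]
    else:
        scored = [0 if r is None else r for r in responses]
    if not scored:
        return 0.0
    return 100.0 * sum(scored) / len(scored)


# Hypothetical responses for the last four questions of a timed test:
# one correct, one incorrect, and two never reached before time ran out.
final_questions = [1, 0, None, None]

all_items = percent_correct(final_questions)
attempted = percent_correct(final_questions, attempted_only=True)
print(f"All questions: {all_items:.0f}% | Attempted only: {attempted:.0f}%")
```

In this made-up case, the same test-taker scores 25% when unanswered questions count as wrong but 50% on the questions actually attempted, which illustrates how running out of time, rather than question difficulty, can account for much of an apparent drop in accuracy.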

Overall, this study shows that more consideration must be given to the effectiveness of timed tests. Examiners need to consider multiple factors when test completion percentages are low. One such consideration is students whose first language differs from the language of the test. These students are typically less fluent and slower readers, so non-native speakers will perform disproportionately poorly on tests of reading comprehension (Gernsbacher et al., 2020). Thus, when time restrictions are imposed on tests, performance gaps increase to the detriment of slow thinkers and slow readers, regardless of their overall intelligence.

Disproportionate Demographic Effects

When more time is given on tests, the performance gaps between demographic groups close, leveling the playing field and making tests more equitable for all. For example, increasing time limits decreases the gender disparities seen in subjects such as mathematics and reading comprehension (Grant, 2023). On average, boys outperform girls in mental rotation and spatial ability, which refers to the ability to mentally transform and rotate 3D objects. This skill helps in STEM fields, specifically with problem solving in mathematics and with design and graphical skills in engineering. For measuring mental rotation ability, the Purdue Spatial Visualization Tests: Visualization of Rotations (PSVT:R) is the leading test in STEM education research. Under severe time constraints for this test (less than 30 seconds per multiple-choice question), males scored significantly better than females. But when given more time on the PSVT:R (around 40-60 seconds per question), both males’ and females’ scores increased, while the gap between their scores decreased (Maeda and Yoon Yoon, 2013). More generous time limits for STEM-related tests, then, narrow the score gap between males and females, which could encourage more high-achieving women who are disadvantaged by inadequate time limits to pursue education and careers in STEM.

Males also perform better than females under intense time pressure, but this advantage decreases as more time is given on tests and the pressure on students is lessened. A study from the Journal of Economic Behavior & Organization analyzed the effects that continuous assessment, a grading method that bases grades on midterm exams in addition to the final, has on student performance. The study found that on high-pressure, multiple-choice exams (the final exam in this case), females tend to omit more answers and subsequently underperform relative to their male peers (Montolio and Taberner, 2021). The researchers attribute these results to differing test-taking approaches: females may experience more test anxiety, resulting in decreased confidence, and may be less prone to take risks by guessing, a tendency that only increases on higher-pressure tests. As a result, “male students are found to outperform female students when sitting high-stake exams (0.132 s.d. [standard deviations]). However, as the stakes at hand decreases, the gender gap in favour of male students is narrowed until it is mitigated and ultimately, in the lowest stake scenario, reversed in favour of female students (0.08 s.d.)” (Montolio and Taberner, 2021). These figures, which express the difference between average scores in standard deviation units, show that the gender performance gap shrank on lower-pressure exams. Because both males and females scored better on average on lower-pressure exams, but females’ scores improved significantly more than males’ (0.153 s.d. compared to 0.018 s.d.), the result indicates that females perform better under less pressure, not that males perform worse (Montolio and Taberner, 2021). When more time is given for exams, less pressure is put on students. Males and females alike are then more likely to perform to their maximum potential, closing gender gaps in academic performance.

Giving more time on exams helps all students perform to their maximum potential, especially students with learning disabilities, who improve proportionally more than non-disabled students when more time is allocated. The idea behind maximum potential is that the more time allowed on a test, the more equitable the test becomes because it enables all students to perform to the best of their ability. This makes the test a better assessment overall (Gernsbacher et al., 2020). Furthermore, according to the Differential-Boost Hypothesis, while all students are aided by extra time on exams, students with disabilities benefit more (Gernsbacher et al., 2020). Not all persons with disabilities can get access to testing accommodations and alternative learning plans because of factors such as the cost of documentation and the stigma surrounding less visible disabilities. Increasing time limits for all students is therefore the best way to remedy this disadvantage. Increased time helps equalize testing performance for everyone who is disadvantaged by slower processing, including older adults.

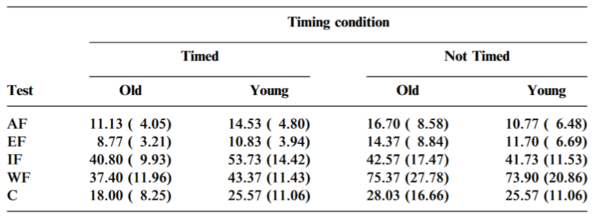

With more time allowances, older adults perform just as well as younger adults with similar experience and education levels on assessments of divergent thinking. Divergent thinking refers to the ability to come up with multiple possible and correct solutions to a single problem. This type of thinking correlates with creativity and overall cognitive ability. Older age is often associated with cognitive decline, but the results of a study from Educational Gerontology showed that although middle-aged and older adults performed worse on timed divergent thinking tests, they performed slightly better on untimed power tests, that is, tests with sufficient time to answer all questions (see Appendix C). These results can be attributed to processing speed slowing with age while overall cognitive ability remains similar (Foos and Boone, 2008). Thus, time restrictions on tests disadvantage older persons pursuing education and don’t assess a person’s cognitive ability as effectively as untimed tests.

Discussion

It is important to note, however, that giving students more time on tests does not automatically produce better test scores. Rather, taking tests free of time pressure, i.e., power tests, is what significantly improves student performance (Gernsbacher et al., 2020). In some cases, speed may be the best indicator of mastery in a subject. In these cases, tests should be designed with speed in mind and impose time restrictions accordingly. In most subjects, though, mastery is best indicated by the methodical acquisition of conceptual knowledge rather than the speed of memorization (Gernsbacher et al., 2020). Examinations designed to test for speed should still be made as equitable as possible and should impose severe time restrictions only when necessary for knowledge assessment or preparation for certain careers. A common reason for severe time limits is that instructors want to test students on as much material as possible to best assess their knowledge. So, they write longer tests with more questions rather than design better, multifaceted questions. The problem is that these longer tests must still fit within finite testing periods. The same practical constraint is the primary obstacle to administering untimed power tests: tests are given in finite time periods, and that must be accounted for.

Given these practical considerations, instructors should allow not an infinite amount of time but just enough for all students to complete their tests thoroughly. Untimed power tests offer the most effective assessment of student intelligence and allow students to demonstrate their maximum potential, unrestricted by time limits and other external pressures. While tests must have some time limit, allowing ample time for all students to complete the test achieves the same effect as untimed power tests while being practical for real-world testing scenarios (Clemens et al., 2014). Shorter tests and tests divided into sections separated by breaks or given over multiple days benefit student performance by improving student engagement, reducing test fatigue, and decreasing pressure. For subjects based on quick recall or rote memorization, such as multiplication tables in math, tests may be designed to test for speed. However, for most applications in higher levels of learning, i.e., the high school and university level, where testing focuses on complex critical thinking and problem-solving skills, power tests remain the better choice.

The only conclusion, then, is that severe time constraints on exams do not effectively assess student knowledge or intelligence. Instead, they increase the disparities seen between advantaged and disadvantaged groups. Time limits also increase the pressure and stress on students, which causes many groups to underperform. They test for different skills than those that determine real-world success, and they affect certain demographics differently. All of this makes timed tests an unfair and ineffective means of evaluating student performance. Professors should therefore take steps to design shorter tests that fit within a longer time frame so that all students may have the best opportunity to succeed.

Appendix A

Appendix A. Correlation between general intelligence (g-factor) and reaction time (RT) for the easiest and hardest questions on the PMAT test.

Note: Figure reprinted from Schirner, M., Deco, G., & Ritter, P. (2023). Learning how network structure shapes decision-making for bio-inspired computing. Nature Communications. https://doi.org/10.1038/s41467-023-38626-y.

Appendix B

Figure B1. Percentage of correct responses for each scoring group.

Figure B2. Percentage of correct responses for each scoring group with unanswered responses removed.

Figure B3. Percentage of students from each scoring group that answered a given question.

Note: Figures reprinted from Clemens, N. H., Davis, J. L., Simmons, L. E., Oslund, E. L., & Simmons, D. C. (2014). Interpreting Secondary Students’ Performance on a Timed, Multiple-Choice Reading Comprehension Assessment: The Prevalence and Impact of Non-Attempted Items. Journal of Psychoeducational Assessment, 33(2). https://doi.org/10.1177/0734282914547493.

Appendix C

Appendix C. Mean (standard deviation) scores of old vs. young adults for five different divergent thinking tests.

Note: Table reprinted from Foos, P. W., & Boone, D. (2008). Adult Age Differences in Divergent Thinking: It's Just a Matter of Time. Educational Gerontology, 34(7), 587-594. https://doi.org/10.1080/03601270801949393.

Works Cited

Clemens, N. H., Davis, J. L., Simmons, L. E., Oslund, E. L., & Simmons, D. C. (2014). Interpreting Secondary Students’ Performance on a Timed, Multiple-Choice Reading Comprehension Assessment: The Prevalence and Impact of Non-Attempted Items. Journal of Psychoeducational Assessment, 33(2). https://doi.org/10.1177/0734282914547493.

Foos, P. W., & Boone, D. (2008). Adult Age Differences in Divergent Thinking: It's Just a Matter of Time. Educational Gerontology, 34(7), 587-594. https://doi.org/10.1080/03601270801949393.

Gernsbacher, M. A., Soicher, R. N., & Becker-Blease, K. A. (2020). Four Empirically Based Reasons Not to Administer Time-Limited Tests. Translational Issues in Psychological Science, 6(2), 175–190. https://doi.org/10.1037/tps0000232.

Gladwell, M. (Host). (2019, June 27). The tortoise and the hare [Audio podcast episode]. In Revisionist History. Pushkin. https://www.pushkin.fm/podcasts/revisionist-history/the-tortoise-and-the-hare.

Grant, A. (2023, Sept. 20). The SATs will be different next year, and that could be a game-changer. The New York Times. https://www.nytimes.com/2023/09/20/opinion/culture/timed-tests-biased-kids.html.

Maeda, Y., & Yoon Yoon, S. (2013). A Meta-Analysis on Gender Differences in Mental Rotation Ability Measured by the Purdue Spatial Visualization Tests: Visualization of Rotations (PSVT:R). Educational Psychology Review, 25, 69-94. https://doi.org/10.1007/s10648-012-9215-x.

Montolio, D., & Taberner, P. A. (2021). Gender differences under test pressure and their impact on academic performance: A quasi-experimental design. Journal of Economic Behavior & Organization, 191, 1065-1090. https://doi.org/10.1016/j.jebo.2021.09.021.

Schirner, M., Deco, G., & Ritter, P. (2023). Learning how network structure shapes decision-making for bio-inspired computing. Nature Communications. https://doi.org/10.1038/s41467-023-38626-y.